Early this year, Detroit police arrested Robert Williams — a Black man living in a Detroit suburb — on his front lawn in front of his wife and two little daughters (ages 2 and 5). Robert was hauled off and locked up for nearly 30 hours. His crime? Face recognition software owned by Michigan State Police told the cops that Robert Williams was the watch thief they were on the hunt for.

There was just one problem: Face recognition technology can’t tell Black people apart. That includes Robert Williams, who has only one thing in common with the suspect caught on the watch shop’s surveillance feed: both are large-framed Black men.

But convinced they had their thief, Detroit police put Robert Williams’ driver’s license photo in a lineup with other Black men and showed it to the shop security guard, who hadn’t even witnessed the alleged robbery firsthand. The guard, relying only on a blurry surveillance image of the incident, claimed Robert was indeed the guy. With that patently insufficient “confirmation” in hand, the cops showed up at Robert’s house and handcuffed him in broad daylight in front of his own family.

It wasn’t until after spending a night in a cramped and filthy cell that Robert saw the surveillance image for himself. While interrogating Robert, an officer pointed to the image and asked if the man in the photo was him. Robert said it wasn’t, put the image next to his face, and said “I hope you all don’t think all Black men look alike.”

One officer responded, “The computer must have gotten it wrong.” Robert was still held for several more hours before finally being released later that day into a cold and rainy January night, where he had to wait about an hour on a street curb for his wife to come pick him up. The charges have since been dismissed.

The ACLU of Michigan is lodging a complaint against Detroit police, but the damage is done. Robert’s DNA sample, mugshot, and fingerprints — all of which were taken when he arrived at the detention center — are now on file. His arrest is on the record. Robert’s wife, Melissa, was forced to explain to his boss why Robert wouldn’t show up to work the next day. Their daughters can never un-see their father being wrongly arrested and taken away — their first real experience with the police. Their children have even taken to playing games involving arresting people, and have accused Robert of stealing things from them.

As Robert puts it: “I never thought I’d have to explain to my daughters why daddy got arrested. How does one explain to two little girls that a computer got it wrong, but the police listened to it anyway?”

One should never have to. Lawmakers nationwide must stop law enforcement use of face recognition technology. This surveillance technology is dangerous when wrong, and it is dangerous when right.

First, as Robert’s experience painfully demonstrates, this technology clearly doesn’t work. Study after study has confirmed that face recognition technology is flawed and biased, with significantly higher error rates when used against people of color and women. And we have long warned that one false match can lead to an interrogation, arrest, and, especially for Black men like Robert, even a deadly police encounter. Given the technology’s flaws, and how widely it is being used by law enforcement today, Robert likely isn’t the first person to be wrongfully arrested because of this technology. He’s just the first person we’re learning about.

That brings us to the second danger. This surveillance technology is often used in secret, without any oversight. Had Robert not heard a glib comment from the officer who was interrogating him, he likely never would have known that his ordeal stemmed from a false face recognition match. In fact, people are almost never told when face recognition has identified them as a suspect. The FBI reportedly used this technology hundreds of thousands of times, yet couldn’t even clearly answer whether it notified people arrested as a result of the technology. To make matters worse, law enforcement officials have stonewalled efforts to obtain documents about the government’s actions, ignoring a court order and multiple requests for case files that would shed more light on the shoddy investigation that led to Robert’s arrest.

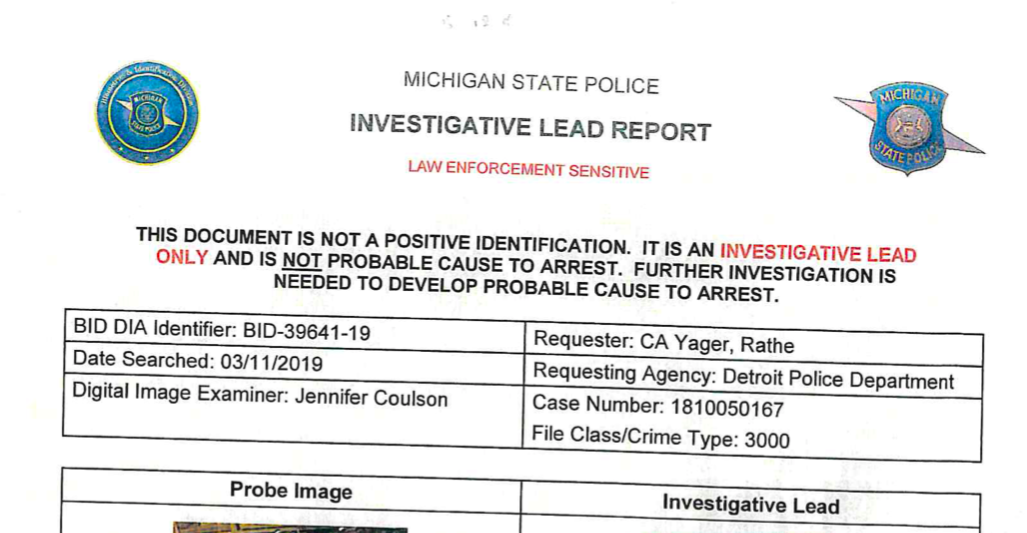

Third, Robert’s arrest demonstrates why claims that face recognition isn’t dangerous are far removed from reality. Law enforcement has claimed that face recognition technology is only used as an investigative lead and not as the sole basis for arrest. But once the technology falsely identified Robert, there was no real investigation. On the computer’s erroneous say-so, people can get ensnared in the Kafkaesque nightmare that is our criminal legal system. Every step the police take after an identification, such as plugging Robert’s driver’s license photo into a poorly executed and rigged photo lineup, is informed by the false identification and tainted by the belief that they already have the culprit. They just need the other parts of the puzzle to fit. Evidence to the contrary, like the fact that Robert looks markedly unlike the suspect, or that he was leaving work in a town 40 minutes from Detroit at the time of the robbery, is likely to be dismissed, devalued, or simply never sought in the first place. And when defense attorneys start to point out that parts of the puzzle don’t fit, you get what we got in Robert’s case: a stony wall of bureaucratic silence.

Fourth, fixing the technology’s flaws won’t erase its dangers. Today, the cops showed up at Robert’s house because the algorithm got it wrong. Tomorrow, it could be because a perfectly accurate algorithm identified him at a protest the government didn’t like or in a neighborhood in which someone didn’t think he belonged. To address police brutality, we need to address the technologies that exacerbate it too. When you add a racist and broken technology to a racist and broken criminal legal system, you get racist and broken outcomes. When you add a perfect technology to a broken and racist legal system, you only automate that system’s flaws and render it a more efficient tool of oppression.

It is now more urgent than ever for our lawmakers to stop law enforcement use of face recognition technology. What happened to the Williams family should not happen to another family. Our taxpayer dollars should not go toward surveillance technologies that can be abused to harm us, track us wherever we go, and turn us into suspects simply because we got a state ID.